EMPATHYbuild

VR experience: testing the effectiveness of the metaverse and virtual reality in elevating user-centered design

Company

SAP

EMPATHYbuild is my master's thesis project, completed as part of my MA degree in Expanded Realities at the Darmstadt University of Applied Sciences. The thesis was written in collaboration with the SAP Innovation Center, where I was employed under a thesis student contract at the time.

I led the thesis delivery from a research, design, and project management perspective throughout the whole cycle: research, conception, prototyping, testing, and evaluation. For the prototype implementation, I worked in sprints alongside two engineers from my team at SAP, who helped with parts of the experience production in Unreal Engine, the interaction backend, and the speech recognition system.

About

The EMPATHYbuild project aimed to conceptualize, prototype, and test a VR experience, simulating a conversation between the user, who takes on the role of a designer or developer, and a User Persona. The User Persona is transformed from a textual 2D format into a full-fledged 3D MetaHuman character. The research project revolves around the central research question:

"To what extent can the Metaverse and Virtual Reality facilitate the creation of user personas, elevate empathy, and ultimately improve user-centered design?"

This project, while initially inspired by popular culture and prior experiences in the VR/MR medium, also heavily incorporates relevant academic research. With the primary goal of enhancing empathy during design processes, this experience aims to address one of the key problems:

Impersonality of user personas.

[...] We found that many design and UX practitioners in our study used them almost exclusively for communication and not design. These practitioners found personas too abstract and impersonal to use for design.

Matthews et al., p.1125

Research

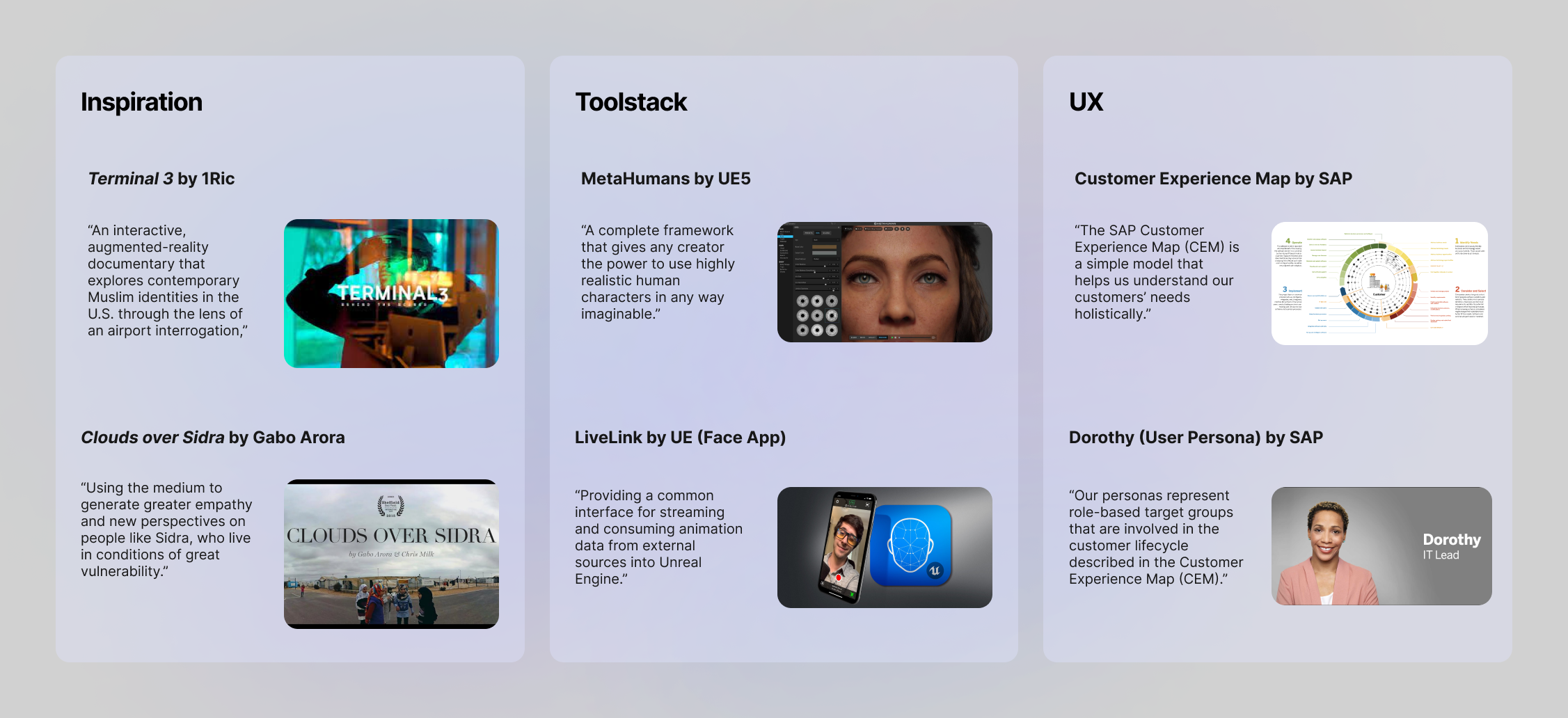

EMPATHYbuild is built upon four research pillars, most of which draw on academic research papers on the topics listed below. It also adopts UX and market research methods to establish a design-first approach to making the experience valuable.

I. User-Centered Design and User Personas

II. Virtual Reality and the Metaverse

III. Virtual Reality and Empathy

IV. EMPATHYbuild Project-specific

SAP Customer Experience Map

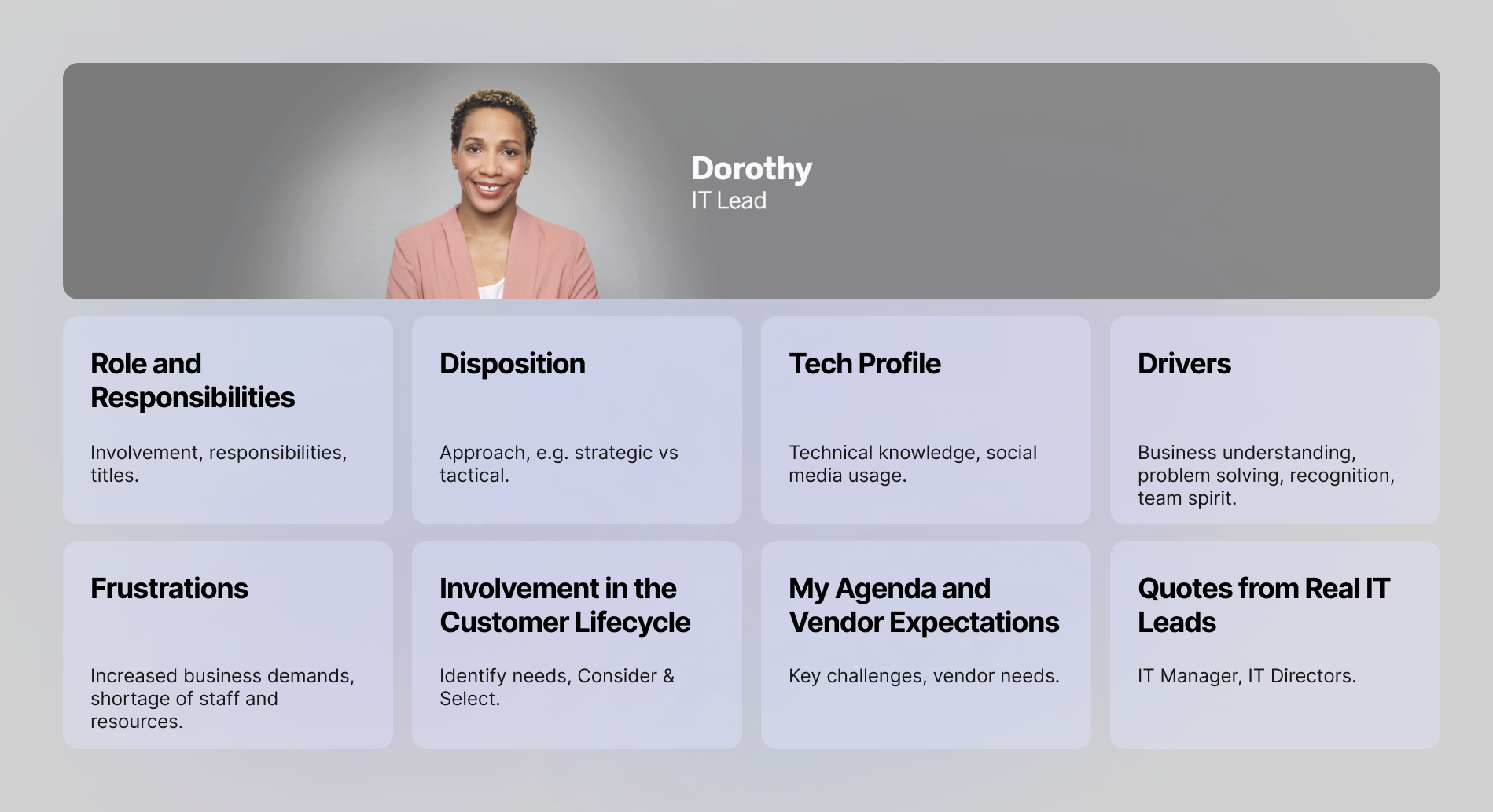

The persona the user converses with in the experience is based on an extensive global research study conducted at SAP, which maps SAP's main business customers as personas:

Customer Experience Map structures all main customer tasks and information needs along their journey with SAP. It is based on extensive, global user research and it truly reflects the customer perspective. This ensures that we stay focused on what our customers need and shape their experience with SAP accordingly.

Defining use cases

After a small design thinking workshop involving the main users and stakeholders of the experience, I defined three scenarios that EMPATHYbuild addresses.

UX/Product Designer

To overcome problems of abstraction and impersonality of current user persona formats.

Product Manager

To get a clearer insight into the user needs for the sake of better product development aligned with UCD.

Software Developer

To get a clearer insight into the importance of solving specific problems within product implementation.

Prototyping

Creating the VR experience required an interdisciplinary design and production approach:

Dorothy, a user persona from SAP's Customer Experience Map, was rewritten in a screenplay format to create a unifying narrative across the different scenes, with a focus on character development.

The experience environment (scene) was designed around who Dorothy is.

The LiveLink Face app was used to produce content and give Dorothy a voice and facial expressions.

Using Unreal Engine 5 and the necessary SDKs, the scene was adapted to work in virtual reality.

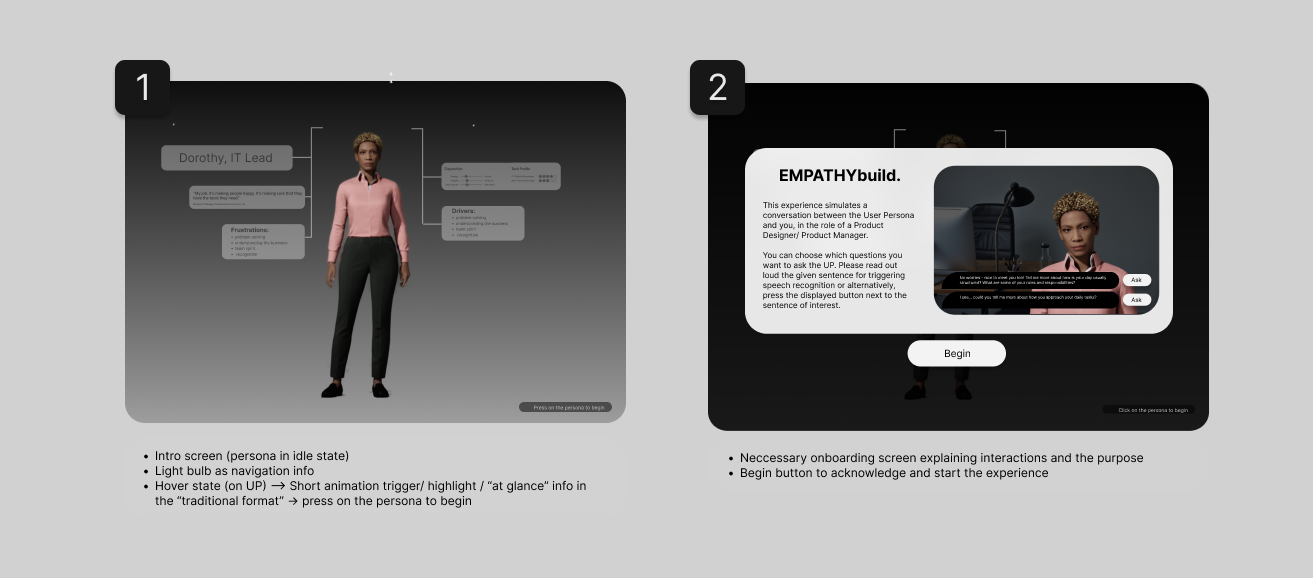

Interaction and UI design: the necessary interactions and onboarding elements were defined, and speech was implemented as the main interaction pattern.

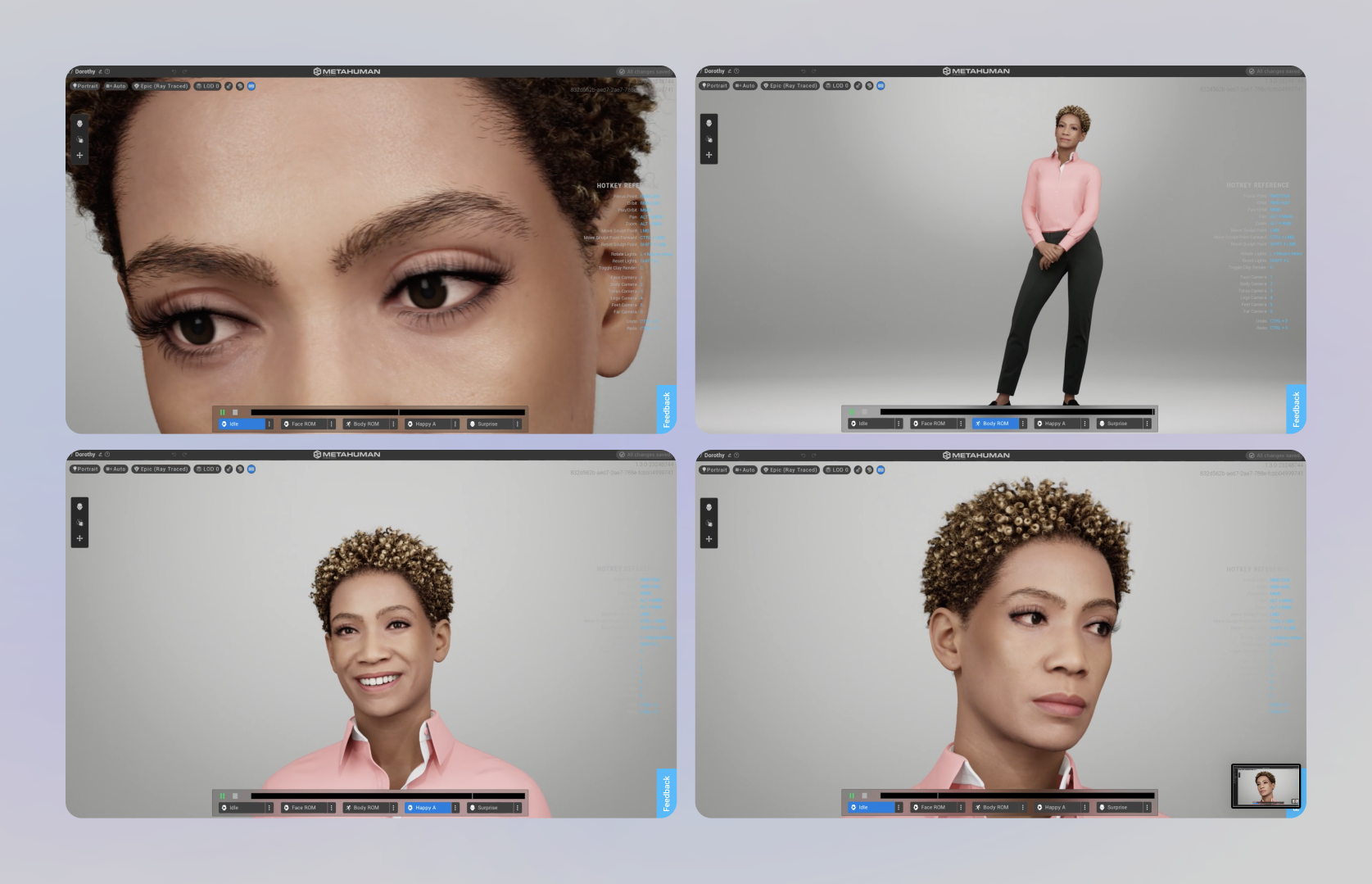

I used Unreal Engine 5's MetaHumans to create Dorothy as a character in the experience, and built a suitable office scene with additional 3D models.

Adapting the user persona's text-based information into a screen-based, interactive, story-driven character began with brainstorming an overarching story that Dorothy is a part of. Drawing on my previous experience in film and theater, I developed a script that could later be discussed and refined with the voice actor who would bring Dorothy to life.

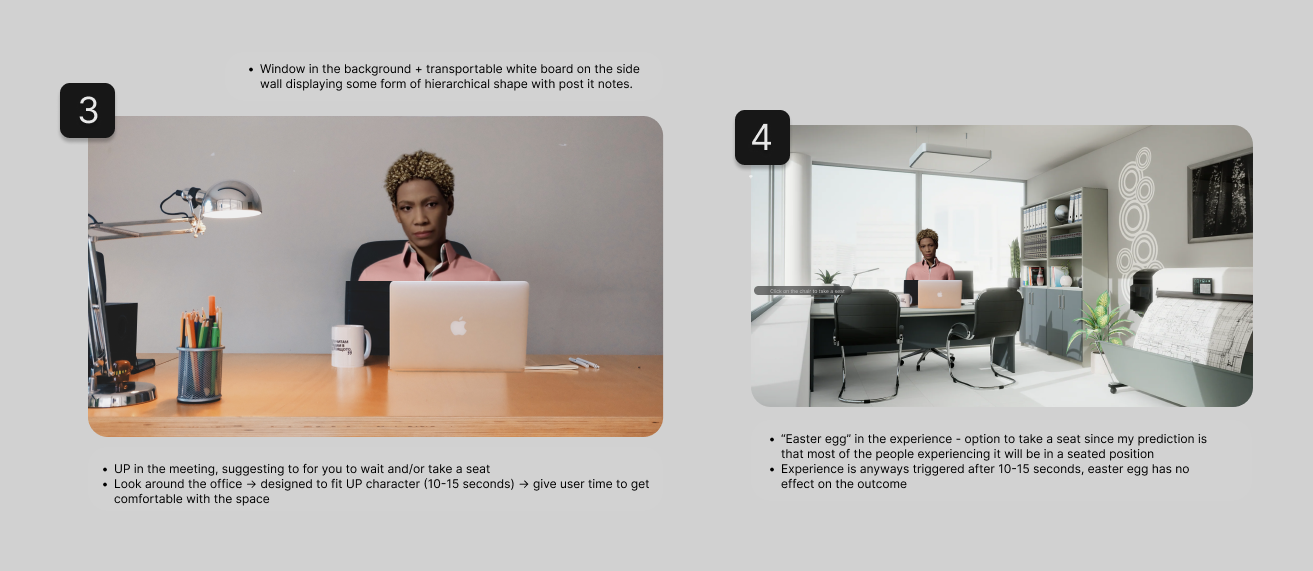

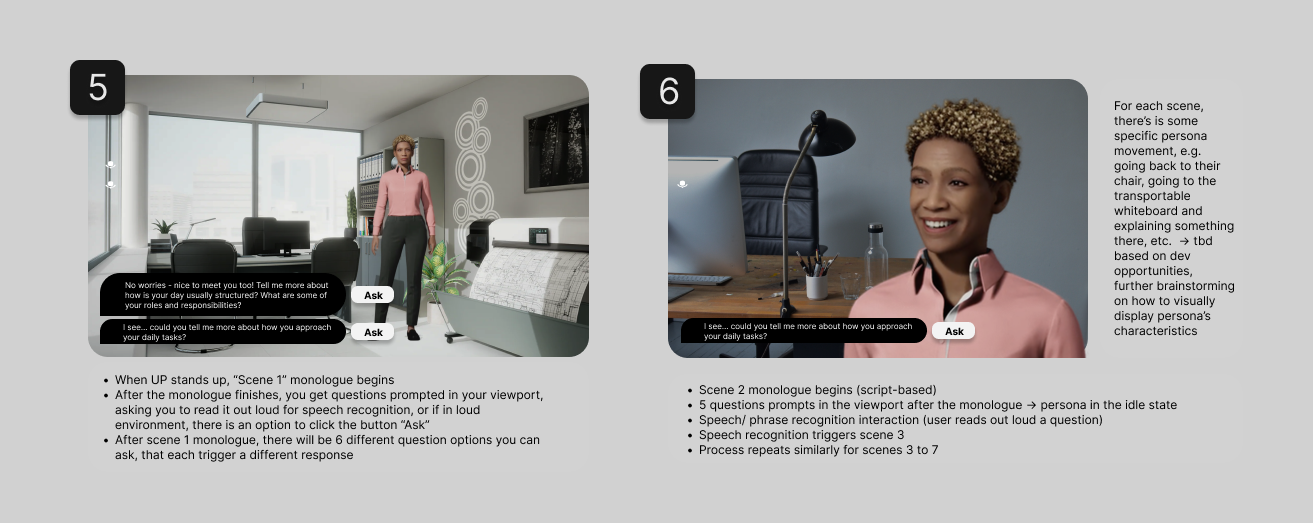

Storyboard/interaction flow + wireframes

To visualize the experience and plan the interactions, I created storyboards and wireframes that mapped out the user journey through the VR environment and their interactions with Dorothy. This helped ensure a coherent narrative flow and identify potential usability issues before development.

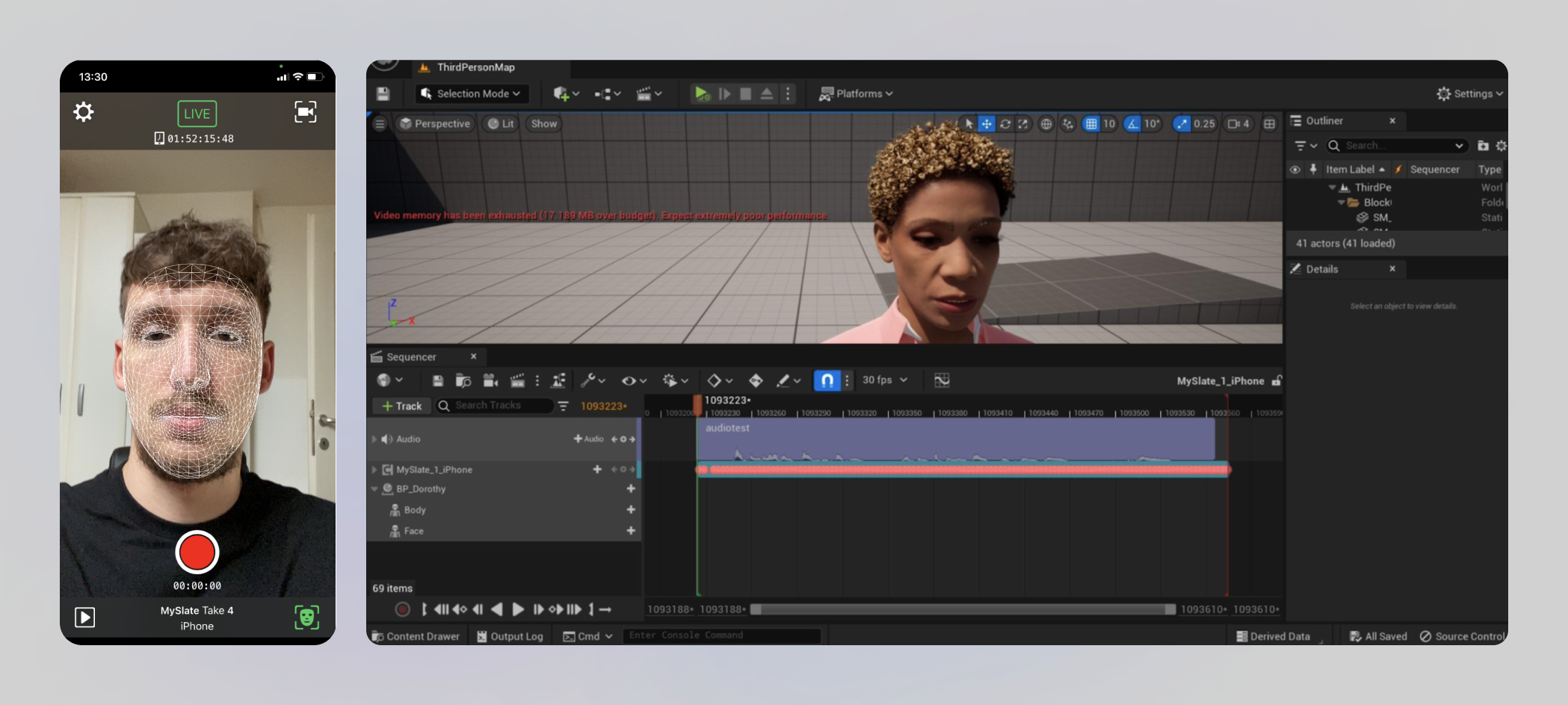

UE5 LiveLink for content production

The face animations and audio that give Dorothy a voice and personality were captured with the LiveLink Face app, which was created specifically to capture face animations for use in Unreal projects, either as recordings or in real time. For this prototype, we used the app's recording feature.

Alongside the face animation data, which is stored in CSV format, the app also generates a video file (such as MP4). A third-party converter can then turn this file into WAV, an audio format supported by Unreal Engine, for use as the voice audio.

Face animations and voice were recorded by my friend and actress, Jess.

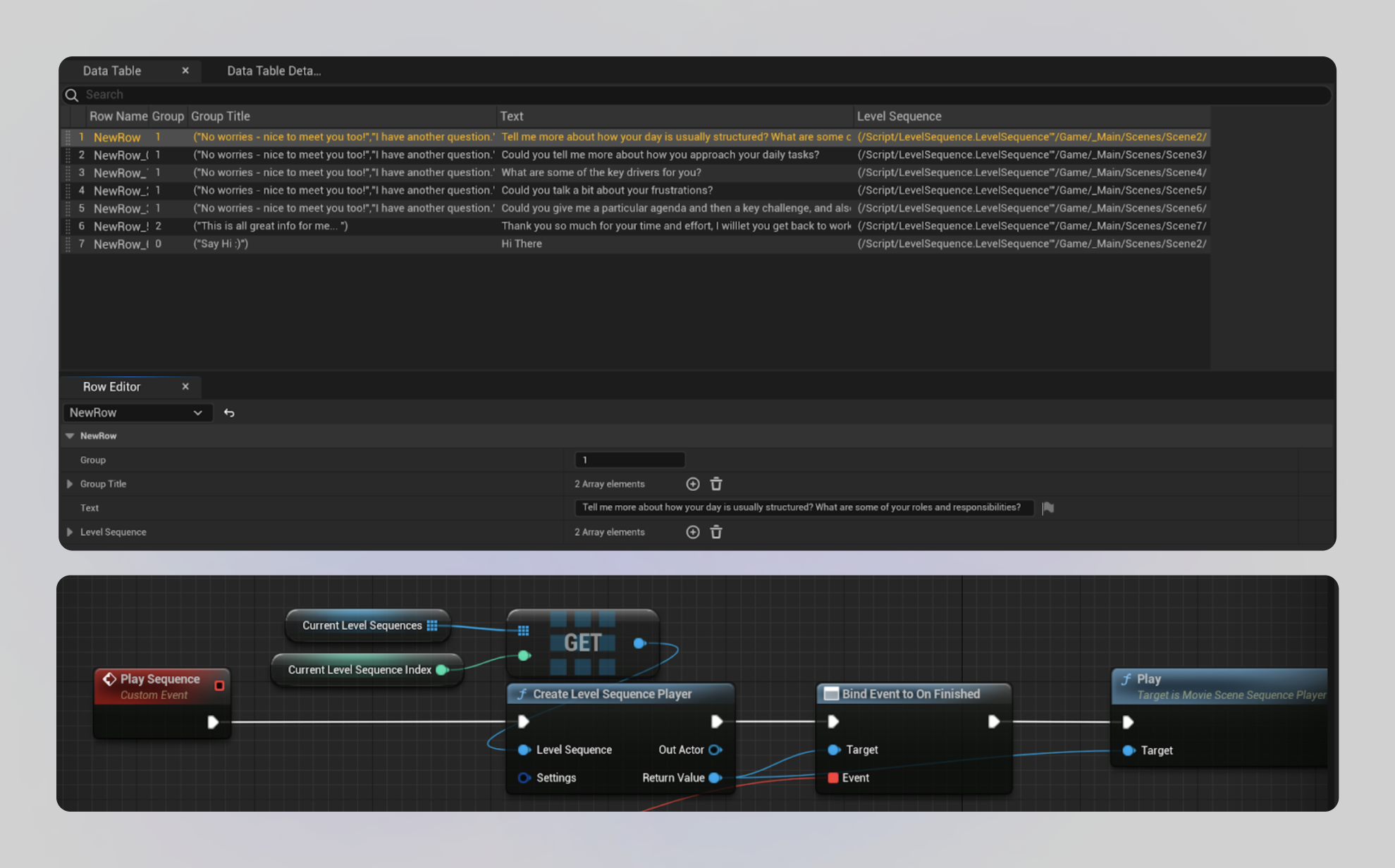

Interaction backend

The skeleton of the whole prototype is implemented with Unreal's Blueprint Visual Scripting. Implementing the interaction flow began with creating a data table that contains the questions the user can ask Dorothy, along with the sequences (Dorothy's answers) that each matching question triggers, as determined by the script.

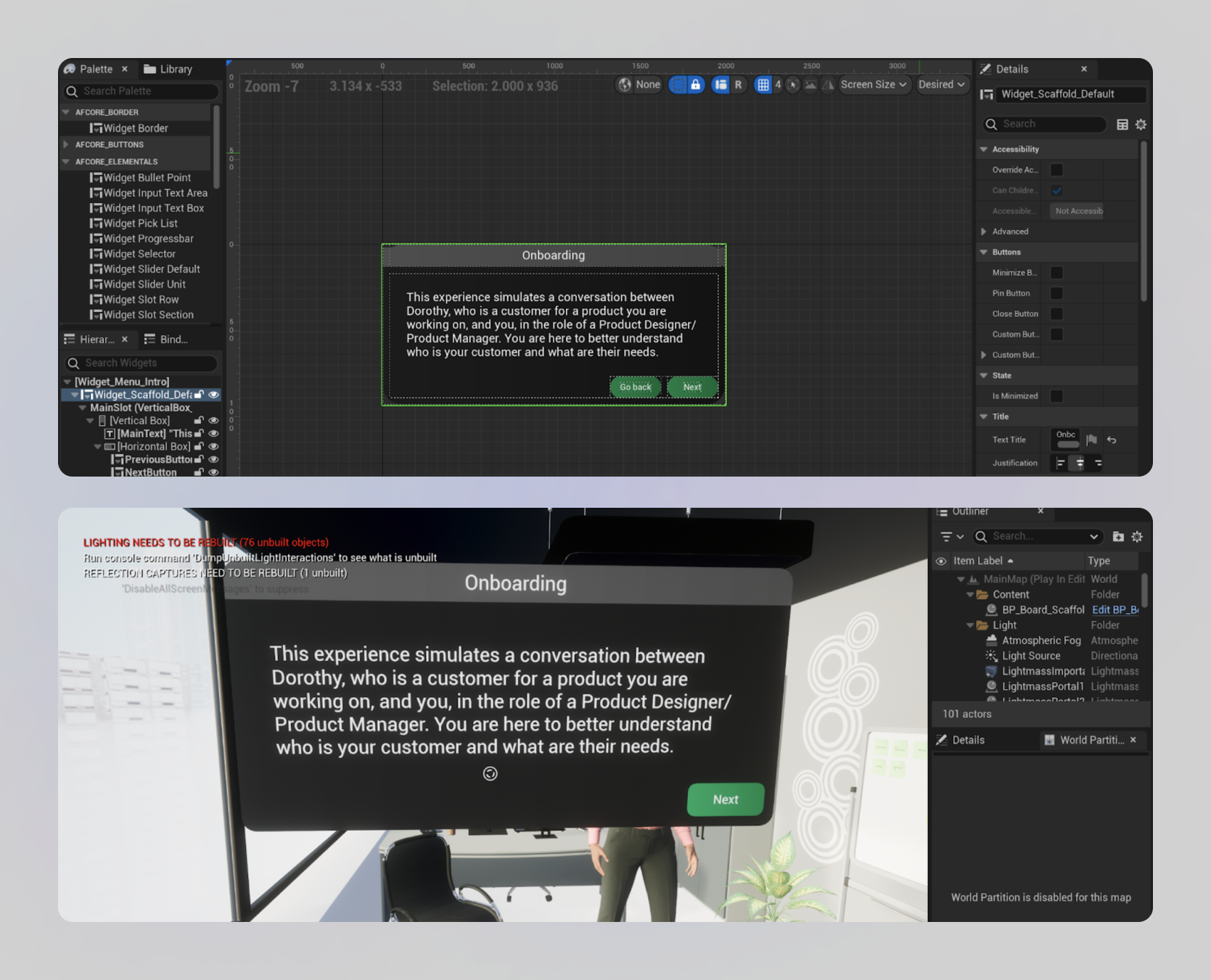
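The idea behind the data table can be sketched outside of Blueprints. The following is a minimal Python illustration, not the prototype's actual implementation: the row names, questions, and sequence names are hypothetical placeholders, and the exact-match lookup is a simplification.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class DialogueRow:
    """One row of the table: a question and the answer sequence it triggers."""
    row_name: str
    question: str
    sequence: str


# Hypothetical rows for illustration only; the real questions come from the script.
DIALOGUE_TABLE: List[DialogueRow] = [
    DialogueRow("Q1", "What does your workday look like?", "SEQ_Workday"),
    DialogueRow("Q2", "What frustrates you about your tools?", "SEQ_Frustrations"),
]


def normalize(text: str) -> str:
    """Lowercase, collapse whitespace, and trim trailing punctuation."""
    return " ".join(text.lower().split()).strip(" ?!.")


def find_sequence(question: str,
                  table: List[DialogueRow] = DIALOGUE_TABLE) -> Optional[str]:
    """Return the sequence linked to the question, or None if there is no match."""
    wanted = normalize(question)
    for row in table:
        if normalize(row.question) == wanted:
            return row.sequence
    return None
```

Keeping questions and sequences in one table means the script can change without touching the lookup logic: adding a new exchange is a matter of adding a row.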

User interface

The UI was implemented with the Advanced VR Framework as in-scene 2D screens with interactive buttons, initially designed in Figma. The Advanced VR Framework is a plugin that offers a variety of functionality, including a simplified way to build VR user interfaces with interactive elements.

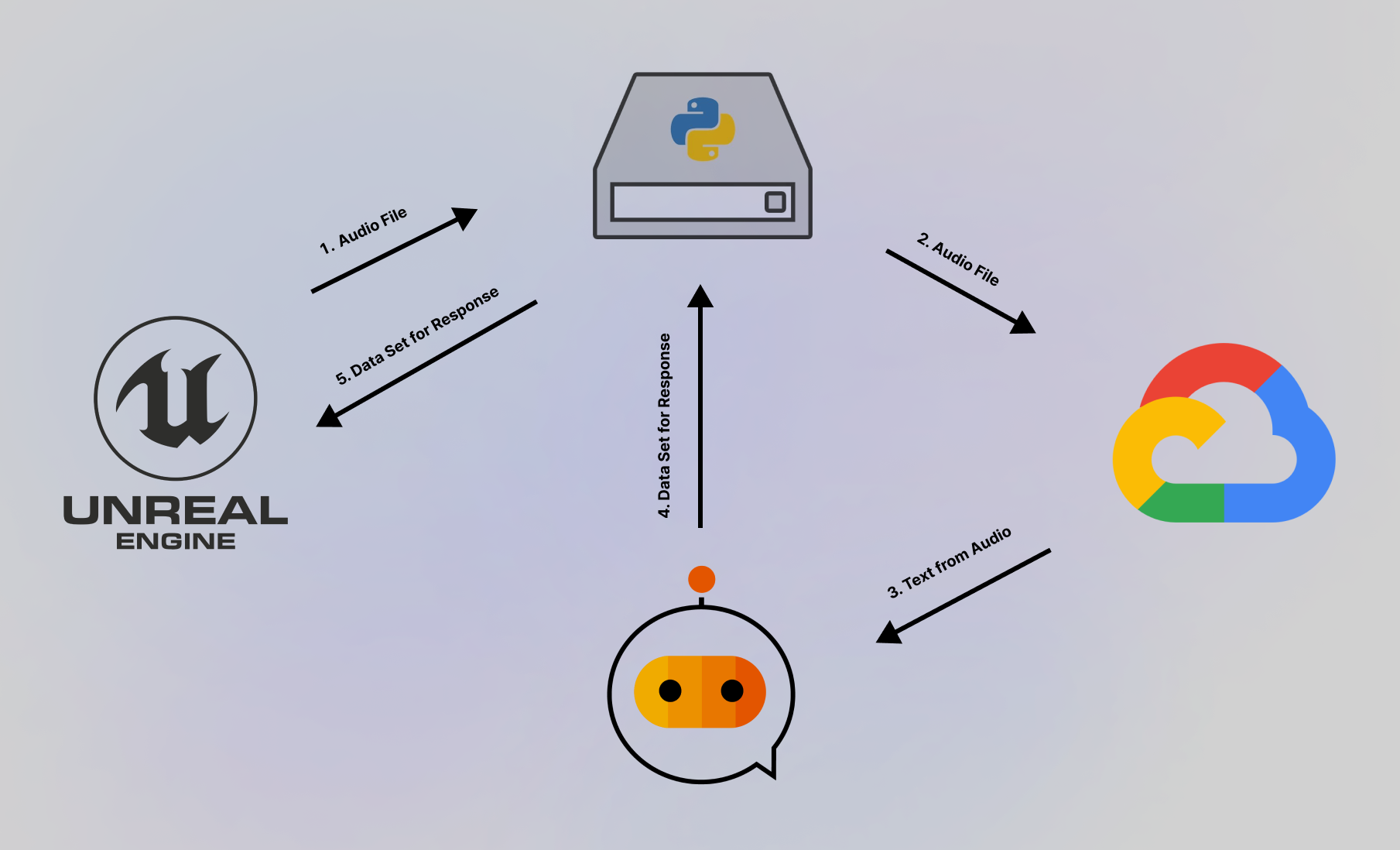

Speech recognition system

This image illustrates the speech recognition process. By pushing a button on the controller, the user triggers voice recording, and the speech is recorded as an audio file in real time. The file passes through a few services, including Google's speech-to-text service, which transcribes the audio to text. The text is then sent to SAP Conversational AI, which can trigger the linked response from Dorothy (the user persona). Dorothy replies based on the recognized question, which comes from the set of questions the user can read from the UI.
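The flow described above can be summarized as a small pipeline. The sketch below is a simplified illustration under stated assumptions: it does not call Google's speech-to-text service or SAP Conversational AI, and instead takes both stages as injected functions with stand-in implementations. All function, intent, and sequence names are hypothetical.

```python
from typing import Callable, Mapping, Optional


def handle_voice_query(
    audio: bytes,
    transcribe: Callable[[bytes], str],
    detect_intent: Callable[[str], Optional[str]],
    responses: Mapping[str, str],
) -> Optional[str]:
    """Run recorded audio through the pipeline and return the sequence to play.

    transcribe stands in for the speech-to-text service, detect_intent for
    the conversational AI service; both are placeholders, not real API calls.
    """
    text = transcribe(audio)
    if not text.strip():
        return None  # nothing was recognized in the recording
    intent = detect_intent(text)
    if intent is None:
        return None  # the question is not one of the supported ones
    return responses.get(intent)


# Stand-in stages for a local demonstration (assumptions, not the real services).
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_detect_intent(text: str) -> Optional[str]:
    return "ask_workday" if "workday" in text.lower() else None


RESPONSES = {"ask_workday": "SEQ_Workday"}

if __name__ == "__main__":
    recording = b"What does your workday look like?"
    print(handle_voice_query(recording, fake_transcribe, fake_detect_intent, RESPONSES))
```

Passing the services in as functions keeps the control flow testable without network access, and returning `None` for unrecognized input gives the experience a single place to decide how Dorothy should react when a question is not understood.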

Experience trailer/Prototype demo

Testing

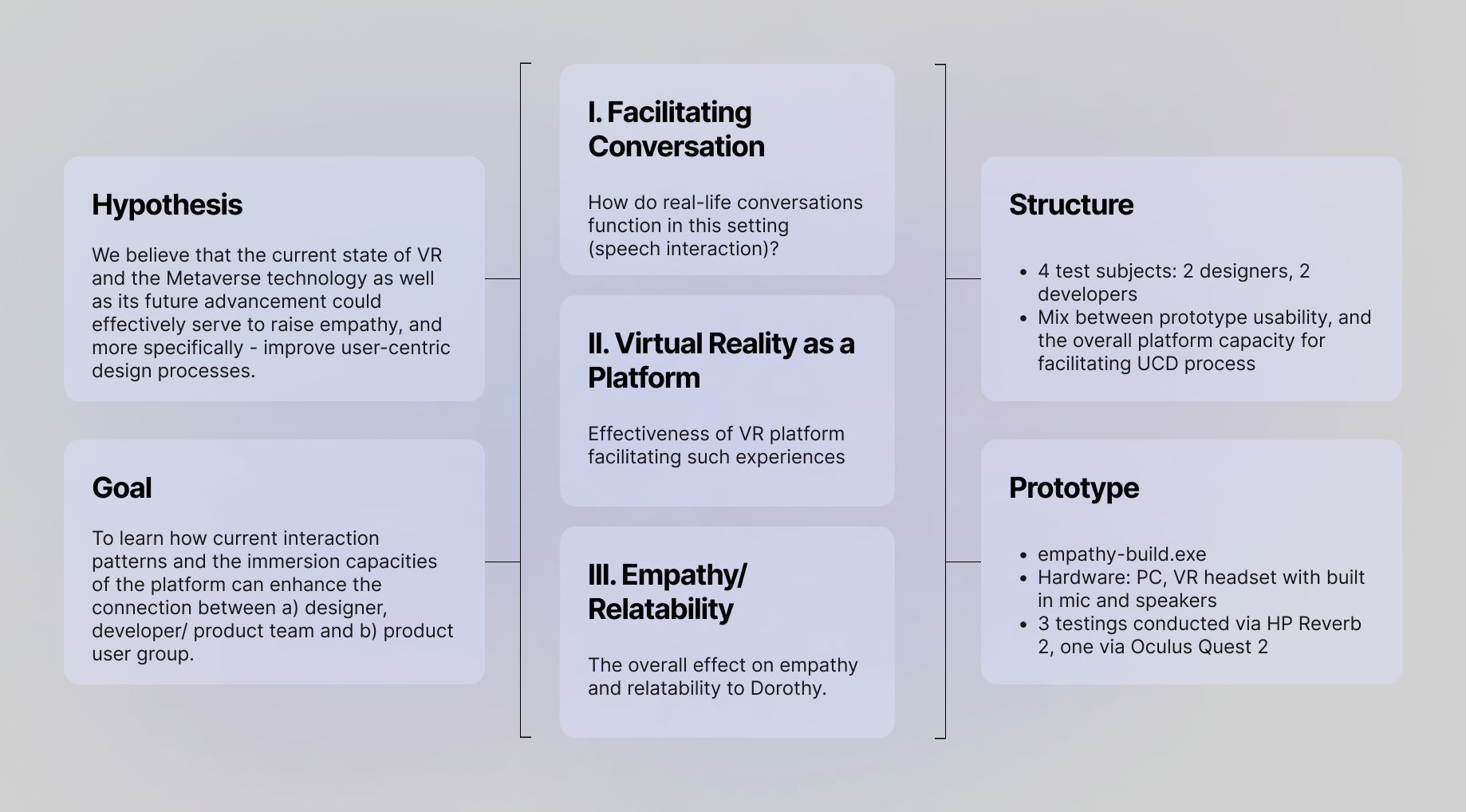

To evaluate the effectiveness of the EMPATHYbuild VR experience in enhancing empathy and improving user-centered design processes, a structured testing approach was developed. The testing plan focused on three key areas: conversation facilitation, VR as a platform, and empathy/relatability.

The image outlines the initial plan for user testing.

Test insights

| I. Facilitating Conversation | II. Virtual Reality as a Platform | III. Empathy/Relatability |
|---|---|---|
| Cons | Cons | Cons |
| Pros | Pros | Pros |

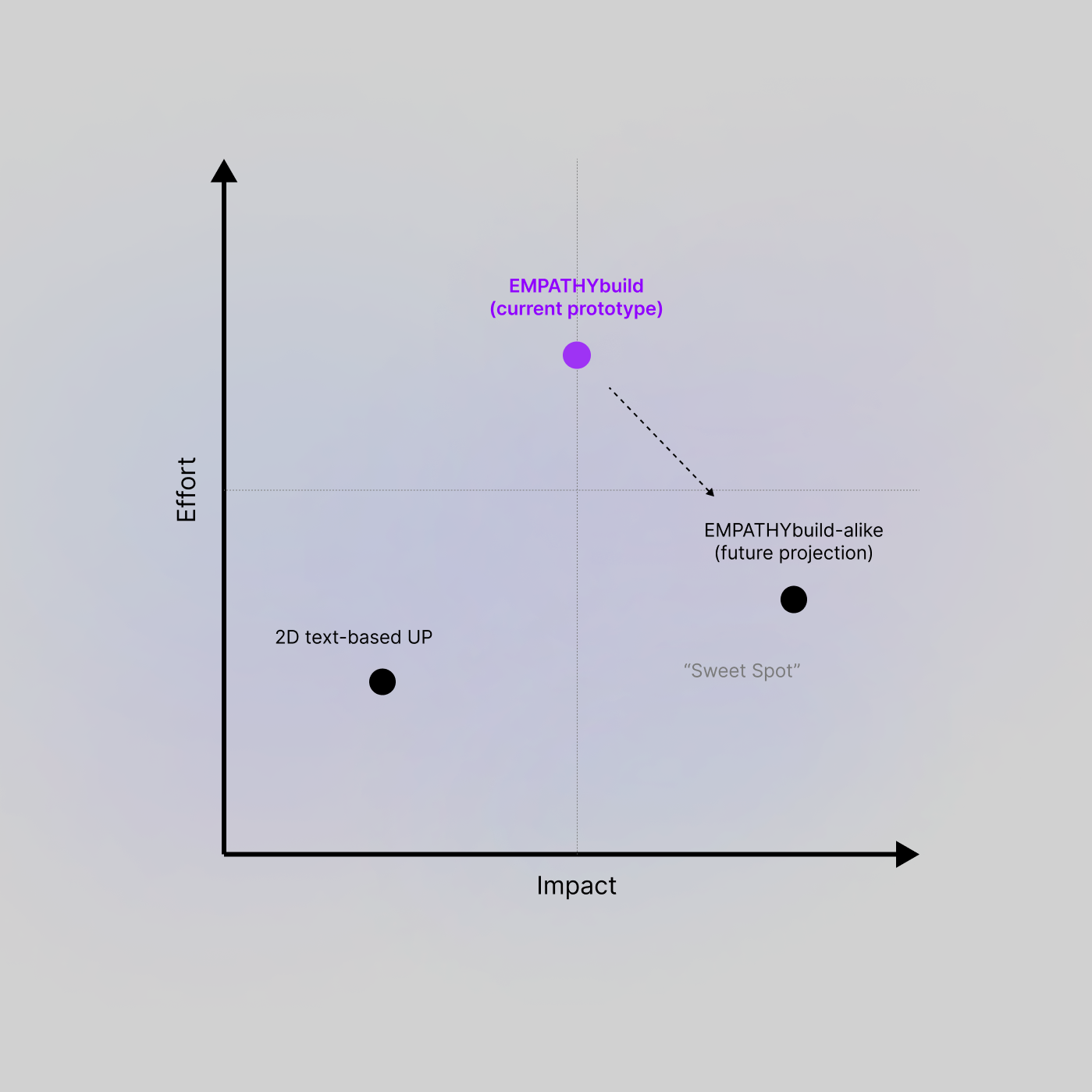

Impact vs effort

Based on the data synthesis, I created an impact vs effort matrix covering current user persona creation tools, the current version of EMPATHYbuild, and a prediction for EMPATHYbuild-type experiences in the future.